Monday, March 29, 2010

What Ozone Hole?

Now that I can make movies from gridded data, I've been going through the ISCCP data that comes with the Climate Scientist Starter Kit. A movie of the ozone data is provided above.

What surprised me was that the amount of ozone over Antarctica was equal to or greater than the amount of ozone over nearly all the rest of the world. Only the Arctic consistently had more ozone (and it has quite a bit of ozone). This was true from the beginning of the movie (July 1983) to the end (June 2008).

Which makes me wonder why we've never heard of an ozone hole over Africa, or South America, or India, all of which always have less ozone than Antarctica.

Now, I'm hardly an ozone expert. So if anyone out there would like to enlighten me on why this all makes sense, please feel free to do so. Because right now I'm wondering if the Ozone Hole is as big a hoax as Global Warming. Tips on why the Arctic has so much ozone are also welcome.

P.S.

If you watch the video all the way through, you get to see what happens when a satellite sensor goes batty.

Sunday, March 28, 2010

Aqua Satellite Project, Update 5 Released

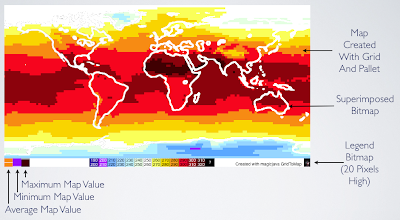

Movie made from GridToMap images.

Update 5 for the Aqua Satellite Project is ready. You can download it here. The big item is the new GridToMap program, which lets you create bitmap images from gridded data.

GridToMap takes various pieces of input data and creates an output bitmap. The inputs and options are:

• Climate data for the entire world in 2.5 by 2.5 degree gridded format. This is a .csv file with 1 optional header row and 3 required header columns.

• A text palette file describing which colors are assigned to which ranges of data.

• A legend bitmap that can display whatever information you want, but is intended to show the image's legend. The legend bitmap must be 20 pixels high and the same width as the main map image.

• An optional superimpose bitmap that will be laid over the map. The superimpose image must be the same width and height as the map (not counting the 20 pixels for the legend).

• The map can begin at the Prime Meridian or the International Date Line.

• Optionally, average, minimum, and maximum values for the map can be displayed as thumbnails in the left 60 pixels of the legend.
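The two core lookups GridToMap has to perform, reading a row of grid values from the .csv file and picking a color for each value from the palette, can be sketched as below. This is a minimal C++ sketch, not GridToMap's source. It assumes the three required header columns come first on each row, that every remaining field is a number (a 2.5 by 2.5 degree world grid has 144 cells per row of latitude), and that a palette line is a hypothetical low/high/color triple covering the half-open range [low, high). PaletteEntry, parseGridRow, and colorFor are illustrative names.

```cpp
#include <cstdint>
#include <sstream>
#include <string>
#include <vector>

// One line of a hypothetical palette: values in [low, high) get this color.
struct PaletteEntry {
    double low;
    double high;
    std::uint32_t rgb;  // 0xRRGGBB
};

// Splits one CSV data row, skips the three leading header columns
// (assumed to be labels), and returns the remaining fields as numbers.
std::vector<double> parseGridRow(const std::string& line) {
    std::vector<double> values;
    std::stringstream ss(line);
    std::string field;
    int column = 0;
    while (std::getline(ss, field, ',')) {
        if (column++ < 3) continue;  // header columns
        values.push_back(std::stod(field));  // assumes no empty cells
    }
    return values;
}

// Returns the color of the first palette range containing value,
// or the fallback color when no range matches.
std::uint32_t colorFor(double value, const std::vector<PaletteEntry>& palette,
                       std::uint32_t fallback = 0x000000) {
    for (const auto& entry : palette) {
        if (value >= entry.low && value < entry.high) return entry.rgb;
    }
    return fallback;
}
```

Values that fall in no palette range get the fallback color here, which is one reasonable choice; GridToMap may treat gaps differently.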

There are three related scripts:

grid_to_map, grid_to_movie, and grid_to_movie_with_filename. All three scripts scan immediate subdirectories for .csv files and run GridToMap against any they find. They differ only in how they name the output files. grid_to_map simply appends ".bmp" to the file name. grid_to_movie uses "movie" followed by a sequence number and ".bmp". grid_to_movie_with_filename uses "movie", a sequence number, three underscores, the file name, the sequence number (again), and ".bmp".

Sample grids, palettes, legends, and overlays are included with the download in the GridToMap folder. For additional details, see the version log that comes with the download.
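The three naming schemes are easy to mix up, so here they are as a small C++ sketch. It assumes sequence numbers are written without zero padding and that "file name" means the input .csv name including its extension; neither detail is stated above, and the function names are hypothetical.

```cpp
#include <string>

// grid_to_map: input file name + ".bmp"
std::string gridToMapName(const std::string& csvName) {
    return csvName + ".bmp";
}

// grid_to_movie: "movie" + sequence number + ".bmp"
std::string gridToMovieName(int sequence) {
    return "movie" + std::to_string(sequence) + ".bmp";
}

// grid_to_movie_with_filename: "movie" + seq + "___" + file name + seq + ".bmp"
std::string gridToMovieWithFilenameName(const std::string& csvName, int sequence) {
    const std::string seq = std::to_string(sequence);
    return "movie" + seq + "___" + csvName + seq + ".bmp";
}
```

The plain "movie" + number scheme is what most frame-to-video tools expect, while the longer form keeps the source file visible at the cost of less uniform names.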

Friday, March 26, 2010

Tuesday, March 23, 2010

Magicjava Climate Data Announced

The first beta release of Magicjava Climate Data has been made available. The data consists of daily and monthly averages for AMSU channel 5 from January 2009 through December 2009, in .csv format. You can get the data here.

Sunday, March 21, 2010

Aqua Satellite Project, Update 4 Released

Update 4 for the Aqua Satellite Project is ready. You can download it here. I'd have to say the big item in this release is the QA. But another big item is the new MapToGrid program.


MapToGrid

The MapToGrid program is for converting information in a black and white bitmap image to a 2.5 by 2.5 degree equal area grid that represents the Earth. An example image is shown to the left. It has water drawn in black and land drawn in white. MapToGrid can take such information and transform it into C code, JavaScript code, XML, or text. Other programs can then use this gridded information as a lookup table.

A screenshot of a JavaScript program that reads grid information and draws the Earth's water and land on an HTML page is shown below.

JavaScript HTML image created using Draw2D and MapToGrid

MapToGrid is the first of several programs that, together, will allow the creation and display of gridded satellite data.

The command line help text for the MapToGrid program describes the details of using the program and is provided below.

MapToGrid converts a map image containing only the colors black and white into grid information. The map image must be a 32-bits-per-pixel, 720 by 360 pixel bitmap (.BMP) image. This image will be treated as a map of the entire world. The grid information will be a 2.5 by 2.5 degree equal area grid which indicates the percentage of white pixels in each grid. The output grid can be formatted as C code, JavaScript code, XML, or text. The default is text. Usage:

MapToGrid [-h] -i Map_Bitmap -o Output_FileName [-f C | JavaScript | XML | text]

h Displays this help text.

i The location of the bitmap (.BMP) input image.

o The name of the output file that will store the grid information.

f The output format. Valid format arguments are C, JavaScript, XML, or text.

There are sample bitmaps suitable for use with MapToGrid in the MapToGrid folder.
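The conversion described in the help text, a 720 by 360 image (0.5 degrees per pixel) reduced to 2.5 by 2.5 degree cells holding the percentage of white pixels, works out to 5 by 5 pixel blocks and a 144 by 72 grid. The C++ sketch below shows that arithmetic. It assumes the pixels have already been loaded from the .BMP file into a top-down, row-major array of 0xAARRGGBB values (BMP files usually store rows bottom-up, so a real loader would flip them) and counts a pixel as white only when all its RGB bits are set. It is an illustration, not MapToGrid's code; whitePercentGrid is a hypothetical name.

```cpp
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

constexpr int kMapWidth = 720;                       // 0.5 degrees per pixel
constexpr int kMapHeight = 360;
constexpr int kCellPixels = 5;                       // 2.5 deg / 0.5 deg
constexpr int kGridCols = kMapWidth / kCellPixels;   // 144
constexpr int kGridRows = kMapHeight / kCellPixels;  // 72

// Returns a row-major 72 x 144 grid; each cell holds the percentage (0-100)
// of white pixels in its 5 x 5 pixel block. Alpha bits are ignored.
std::vector<double> whitePercentGrid(const std::vector<std::uint32_t>& pixels) {
    if (pixels.size() != static_cast<std::size_t>(kMapWidth) * kMapHeight)
        throw std::invalid_argument("expected a 720 x 360 image");
    std::vector<double> grid(kGridRows * kGridCols, 0.0);
    for (int row = 0; row < kGridRows; ++row) {
        for (int col = 0; col < kGridCols; ++col) {
            int white = 0;
            for (int dy = 0; dy < kCellPixels; ++dy) {
                for (int dx = 0; dx < kCellPixels; ++dx) {
                    const int y = row * kCellPixels + dy;
                    const int x = col * kCellPixels + dx;
                    if ((pixels[y * kMapWidth + x] & 0x00FFFFFFu) == 0x00FFFFFFu) ++white;
                }
            }
            grid[row * kGridCols + col] = 100.0 * white / (kCellPixels * kCellPixels);
        }
    }
    return grid;
}
```

Emitting the result as C, JavaScript, XML, or text is then just a matter of printing these 10,368 numbers in the chosen syntax.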

QA

A QA scrub was given to nearly all the existing programs (AMSUNormalize still needs to be done). Several major bugs were fixed and some new features added.

One of the new features is the saving of scans that don't pass NASA QA during an AMSUExtract run. You can take these rejected scans and run them through AMSUSummary just like the regular scans. A screenshot of a graph of rejected scans that has been summarized by AMSUSummary is shown below.

Another new feature is the -FLOOR switch added to AMSUExtract. This option lets you specify a lower bound for scan values; any scan with a value below the floor is rejected. You don't need to specify a value when using the -FLOOR switch; in that case the floor defaults to 150 K (-123.15° C, -189.67° F). The -FLOOR switch replaces the -q switch, which has been removed.
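Here is a minimal C++ sketch of the -FLOOR behavior, plus the unit conversions behind the quoted default. It assumes a scan is rejected when any one of its readings falls below the floor, which the text doesn't spell out; passesFloor and routeScan are hypothetical names, not AMSUExtract functions.

```cpp
#include <cstddef>
#include <iostream>
#include <vector>

constexpr double kDefaultFloorKelvin = 150.0;

double kelvinToCelsius(double k) { return k - 273.15; }
double kelvinToFahrenheit(double k) { return kelvinToCelsius(k) * 9.0 / 5.0 + 32.0; }

// Assumption: a scan passes only if every reading is at or above the floor.
bool passesFloor(const std::vector<double>& scanKelvin,
                 double floorKelvin = kDefaultFloorKelvin) {
    for (double t : scanKelvin)
        if (t < floorKelvin) return false;
    return true;
}

// Writes the scan as one CSV line: stdout if it passes, stderr if it fails.
void routeScan(const std::vector<double>& scanKelvin,
               double floorKelvin = kDefaultFloorKelvin) {
    std::ostream& out = passesFloor(scanKelvin, floorKelvin) ? std::cout : std::cerr;
    for (std::size_t i = 0; i < scanKelvin.size(); ++i)
        out << (i ? "," : "") << scanKelvin[i];
    out << '\n';
}
```

With these helpers, 150 K converts to -123.15° C and -189.67° F, matching the figures above. Sending failures to stderr keeps the passing data on stdout untouched, so a wrapper script can redirect the two streams to separate files.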

Yet another new feature resulting from the QA is that AMSUSummary now prints lower-third and upper-third averages rather than minimum and maximum values. This removes much of the noise the minimum and maximum values introduced. The minimum and maximum features have been removed.
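The lower-third and upper-third averages can be computed by sorting a copy of the readings and averaging the first and last n/3 values, as in this C++ sketch. How AMSUSummary handles a count that isn't divisible by three isn't stated; this version uses integer division and ignores the leftover middle values. ThirdAverages and thirdAverages are hypothetical names.

```cpp
#include <algorithm>
#include <cstddef>
#include <numeric>
#include <stdexcept>
#include <vector>

struct ThirdAverages {
    double lower;  // mean of the smallest n/3 values
    double upper;  // mean of the largest n/3 values
};

// Takes the readings by value so they can be sorted locally.
ThirdAverages thirdAverages(std::vector<double> values) {
    const std::size_t third = values.size() / 3;  // integer division
    if (third == 0) throw std::invalid_argument("need at least 3 values");
    std::sort(values.begin(), values.end());
    const auto n = static_cast<std::ptrdiff_t>(third);
    const double lowerSum = std::accumulate(values.begin(), values.begin() + n, 0.0);
    const double upperSum = std::accumulate(values.end() - n, values.end(), 0.0);
    return {lowerSum / third, upperSum / third};
}
```

Because each average pools many readings, a single wild value moves it far less than it would move a minimum or maximum.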

A previous post showed a graph of the lower and upper averages that looked rather blocky. This has been fixed and you can use these features with full confidence.

Corrected Lower Third Averages Example

Change Log

So those are the big items. Here's the text of the change log for full details:

The following improvements were made for Update 4:

*) Added MapToGrid program that converts a map image containing only the colors black and white into grid information.

The map image must be a 32-bits-per-pixel, 720 by 360 pixel bitmap (.BMP) image. This image will be treated as a map of the entire world.

The grid information will be a 2.5 by 2.5 degree equal area grid which indicates the percentage of white pixels in each grid cell.

The output grid can be formatted as C code, JavaScript code, XML, or text. The default is text.

Includes command line help text.

*) Updated AMSUExtract program to send data that does not pass NASA's QA to stderr. Updated amsu_extract script to handle this option. It sends stderr output to a file with the same name as the regular file, but with the text "_Rejected" just before the file type suffix.

*) Added -FLOOR switch to AMSUExtract. The switch takes an optional value in kelvin. Any scan below this value is sent to stderr rather than stdout. The value for -FLOOR defaults to 150 K (-123.15° C, -189.67° F).

*) Removed -q switch from AMSUExtract. Limb adjustment checks are no longer done by this program. NASA QA checks are done automatically now, as described above.

*) Default tolerance for Limb Check in AMSUQA raised from 0.5 to 1.0. Channels 12 and 19 added to the list of channels on which no limb check is performed. Miscellaneous bugs in limb check values fixed.

*) Modified AMSUSummary to report daily and monthly lower and upper 1/3 averages rather than minimum and maximum values.

*) QAed AMSUExtract and AMSUSummary, fixing several major bugs.

*) MonthlyData and MonthlyDataMap have been refactored into the more generic ColumnData and RowDataMap. These new classes are templates. The number of columns, ID type, and data type are parameterized.

*) Added the ability to retrieve upper and lower averages to the ColumnData and RowDataMap classes.

*) Added avg and sum scripts. These scripts take a file name and a column number and return the average or sum of that column. These are Unix scripts, requiring Unix utilities like cut, grep, and awk. These scripts are not, and never will be, part of the main workflow; they are intended as debugging aids. These new scripts are located in the Scripts folder. See the comments inside the scripts for details on using them.

Results Of Aqua Satellite Project QA Checks

The big QA session for the Aqua Satellite project is done. The result: there were some major bugs in the code. With these bugs fixed, the data from NASA looks the way you'd expect it to. The unusual readings for each footprint mentioned in this post are still there, but they're not enough to throw off the averages.


Major Bug Fixes

We'll take a look at all this data, but first, here's a list of the major bugs that were fixed:

● The Limb Check had values that were too small for some of the footprints in its lookup table. This is what was causing entire footprints to be dropped from the old QA checks.

● A subtle bug in the GNU C++ template generator was generating integers rather than floating point variables when working with floating point data. This was causing the decimal places to be chopped off, throwing off things like average values. This was fixed by getting rid of the offending templates and replacing them with a series of overloaded functions.

● The order in which I was grabbing data for daily and monthly summaries was wrong, causing data for one footprint to be displayed as another footprint. This has been fixed.

● The concept of daily and monthly minimum and maximum values produced by AMSUSummary was and is fundamentally flawed. For reasons why, see my post on Noise. Therefore, the concept has been replaced with averages for the lower third of the data and the upper third of the data. There's enough data that goes into these calculations that noise shouldn't be a problem.
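The symptom in the second bullet, floating point data silently becoming integers inside templated code, is commonly caused by template argument deduction picking up an integer type from a literal. The C++ sketch below shows one well-known instance with std::accumulate, whose accumulator type is deduced from its initial value. It illustrates the general pitfall only; the post doesn't say which templates were involved, and the function names are hypothetical.

```cpp
#include <numeric>
#include <vector>

// The literal 0 makes the accumulator an int: each partial sum
// (int + double) is converted back to int, dropping the fraction.
double truncatedAverage(const std::vector<double>& v) {
    int sum = std::accumulate(v.begin(), v.end(), 0);
    return static_cast<double>(sum) / v.size();
}

// The literal 0.0 makes the accumulator a double, so nothing is lost.
double correctAverage(const std::vector<double>& v) {
    double sum = std::accumulate(v.begin(), v.end(), 0.0);
    return sum / v.size();
}
```

For the readings 1.5 and 2.5, the first version accumulates 1 and then 3, giving an average of 1.5 instead of 2.0. Most compilers will warn about the double-to-int conversion if warnings such as -Wconversion are enabled.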

What The Data Looks Like Now

The data displayed here covers January 2008 and January 2009 through January 2010, 14 months of data in total. Letting the pictures speak for themselves:

Monthly Averages, By Footprint

Monthly Averages, By Date

Monthly Lower Third Averages, By Footprint

Monthly Lower Third Averages, By Date

Monthly Upper Third Averages, By Footprint

Monthly Upper Third Averages, By Date

Daily Averages, By Footprint

Daily Averages, By Date

Daily Lower Third Averages, By Footprint

Daily Lower Third Averages, By Date

Daily Upper Third Averages, By Footprint

Daily Upper Third Averages, By Date

The upper and lower values look a little blocky. So I'll do some more QA on those. I also still need to QA AMSUNormalize. However, none of those things are enough to prevent this code from being released as part of Update 4. Update 4 will be released later today.

Previous Posts In This Series

QAing AMSUExtract

What's Wrong With This Picture?

Saturday, March 20, 2010

One Month Of AMSU Channel 5 Data

This video shows 1 month of Aqua AMSU Channel 5 data as it was displayed during one of my debugging sessions. The video is kinda blurry due to low resolution, but don't worry, you wouldn't be able to read all the data scrolling by even at HD resolution. Enjoy :)

Update:

Here's a screen shot of the end of the video in better resolution so you can see the data is nothing but the numeric readings from the channel.

Friday, March 19, 2010

National Snow And Ice Data Center Releases AMSR-E Satellite Surface Temperature Data

I just got this in the mail late yesterday. The National Snow And Ice Data Center (NSIDC) has just released several new climate products, including minimum and maximum air surface temperatures.


Here's the e-mail:

NSIDC is pleased to announce the release of the Daily Global Land Surface Parameters Derived from AMSR-E data set. This data set contains satellite-retrieved geophysical parameters generated from the Advanced Microwave Scanning Radiometer - Earth Observing System (AMSR-E) instrument on the National Aeronautics and Space Administration (NASA) Aqua satellite. Parameters include:

-Air temperature minima and maxima at approximately 2 meters in height

-Fractional cover of open water on land

-Vegetation canopy microwave transmittance

-Surface soil moisture at less than or equal to 2 centimeters soil depth

-Integrated water vapor content of the intervening atmosphere for the total column

The daily parameter retrievals extend from 19 June 2002 through 31 December 2008. The global retrievals were derived over land for non-precipitating, non-snow, and non-ice covered conditions. The primary input data were daily AMSR-E dual polarized multi-frequency, ascending and descending overpass brightness temperature data.

For more information regarding this data set, please see http://nsidc.org/data/nsidc-0451.html.

This is pretty timely. There are several simultaneous efforts going on right now to better understand Urban Heat Islands (UHI). The minimum and maximum air temperatures at approximately 2 meters should be particularly relevant to these studies. A signature of UHI is that nighttime temperatures go up while daytime temperatures stay about the same. This new data will give us the tools we need to look for UHI directly on a global scale.
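The UHI signature described above, nighttime minimums rising while daytime maximums stay flat, can be screened for by comparing the trends of the two series. The C++ sketch below fits an ordinary least-squares slope to each series and flags the signature when the minimum's slope exceeds the maximum's by a chosen margin. The function names and the margin are hypothetical, and a real study would also need to handle gaps in the daily retrievals (the data set excludes precipitating and snow- or ice-covered conditions) and compare urban against rural grid cells.

```cpp
#include <cstddef>
#include <stdexcept>
#include <vector>

// Ordinary least-squares slope of y against its index (units of y per step).
double trendPerStep(const std::vector<double>& y) {
    const std::size_t n = y.size();
    if (n < 2) throw std::invalid_argument("need at least 2 points");
    const double meanX = (n - 1) / 2.0;
    double meanY = 0.0;
    for (double v : y) meanY += v;
    meanY /= n;
    double num = 0.0, den = 0.0;
    for (std::size_t i = 0; i < n; ++i) {
        const double dx = static_cast<double>(i) - meanX;
        num += dx * (y[i] - meanY);
        den += dx * dx;
    }
    return num / den;
}

// Flags the signature: Tmin warms faster than Tmax by at least `margin`
// per time step.
bool looksLikeUhiSignature(const std::vector<double>& tmin,
                           const std::vector<double>& tmax, double margin) {
    return trendPerStep(tmin) - trendPerStep(tmax) >= margin;
}
```

Comparing slopes rather than raw values keeps a site's overall warmth (which can be geographic) separate from the change in its day/night contrast over time.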

And since the AMSR-E used to gather this data is part of the Aqua satellite, I can fold in studying the new data with the work on the satellite.

So expect to hear more about this in the near future.

Thursday, March 18, 2010

QAing AMSUExtract

In A NutShell


So, I've been QAing AMSUExtract for the last couple of days. The end result is that several bugs were found and fixed in my code. But even with these fixes, a lot of data was still failing limb validation. Even after increasing the limb validation tolerance from the original 0.5 to 10.0 (giving over 100 degrees tolerance in some cases), there were still more failures than I would consider acceptable.

This told me that having a limb validation in AMSUExtract was pointless. To get a decent number of records to pass this QA check, I'd need to make the check so loose that nearly any imaginable value would pass.

So limb validation, the -q switch of AMSUExtract, has been taken out. It's been replaced with a -FLOOR switch that lets you set a lower bound for a valid temperature. The default lower bound when you use -FLOOR is 150 degrees K (-123.15° C, -189.67° F), but you can pass any numeric value you want. Scans that are marked as bad by NASA or that fail the optional -FLOOR check are the only ones that will now fail in AMSUExtract.

Limb validation is still available as an option for the AMSUQA program.

I want to thank Malaga View for suggesting the feature to save not only scans that pass QA, but also scans that fail QA. This feature is now part of AMSUExtract and was very useful for this QA check. BTW, should anyone else have ideas for features, please feel free to suggest them. If I have the time, I'll add them.

The end result of all this is AMSUExtract is now working and has new features to boot. This will be released in Update 4. Now I move on to QAing AMSUSummary.

Final Results With -FLOOR switch.

The file is clean like this all the way through.

The Painful Details

This part of the post can be skipped. It covers the details of how the QA was carried out. It's here for people who just love to read about QA procedures on blogs or who may need to know the details for something they're doing on their own.

Setup For QA

I set up the QA runs by removing all but one folder from the directory I was working in. That folder contained the .hdf files for a single month. It, in turn, contained a text folder holding the text files created by ncdump from the .hdf files.

As always, ncdump was run with no command line arguments, just the file name of the file being converted.

You can download ncdump from here.

Running Release 3 Version Of AMSUExtract

The amsu_extract script was used to extract data from all the files for the month. The exact command line was:

amsu_extract -extension C5F1-30_QA_TEST -c 5 -f 1-30 -q

This extracts channel 5, footprints 1 through 30 and performs QA checks on them. The -extension argument tells the script how to name the output file. In this case the output extract file will be named 200912_extract_C5F1-30_QA_TEST.csv, where 200912 is the name of the folder storing the monthly data. This file is created in the same directory from which the script is run.

The version of AMSUExtract called by the amsu_extract script was the same as the one in Update 3 with one exception: scans that fail QA are sent to stderr. The amsu_extract script, in turn, saves stderr to a separate file.
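The naming rules (the extract file built from the month folder and the -extension value, and the rejected file with "_Rejected" inserted just before the suffix, as described in the Update 4 change log) can be sketched as follows. This is a C++ illustration, not the amsu_extract script itself, and the function names are hypothetical.

```cpp
#include <string>

// <month folder>_extract_<extension>.csv
std::string extractFileName(const std::string& monthFolder,
                            const std::string& extension) {
    return monthFolder + "_extract_" + extension + ".csv";
}

// Inserts "_Rejected" just before the file type suffix (the last '.').
std::string rejectedFileName(const std::string& fileName) {
    const std::string::size_type dot = fileName.rfind('.');
    if (dot == std::string::npos) return fileName + "_Rejected";
    return fileName.substr(0, dot) + "_Rejected" + fileName.substr(dot);
}
```

For the run above this gives 200912_extract_C5F1-30_QA_TEST.csv for the passed scans and 200912_extract_C5F1-30_QA_TEST_Rejected.csv for the failed ones.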

Results Of Running Release 3 Version Of AMSUExtract

Step 1: Check Values Being Extracted

The first thing to do is verify that AMSUExtract is pulling the correct data. To do this, use HDFView and open up the original .hdf files downloaded from NASA. You can download HDFView from here.

Use the File/Open command to open the .hdf files you want to look at. Use the tree control on the left to navigate to brightness_temp. Double-click on brightness_temp. At the top of the grid window that opens up, use the page scroller to move to page 4. Page 4 holds the data for channel 5 because the page numbering starts at zero.

You'll notice the rows in the window are numbered 0-44. These are the scan lines. The columns are numbered 0-29. These are the footprints.
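When checking values by hand it helps to be clear about the indexing. The C++ sketch below assumes brightness_temp is laid out as [scan line][footprint][channel] with 45 scan lines, 30 footprints, and 15 AMSU-A channels, all zero-based, which is consistent with HDFView showing scan lines 0-44 as rows, footprints 0-29 as columns, and channels as pages. It converts the 1-based footprint and channel numbers used in the text (as in -c 5 -f 1-30) into a flat offset. The storage order is an assumption, not taken from NASA documentation, and brightnessTempOffset is a hypothetical name.

```cpp
#include <cstddef>
#include <stdexcept>

// Assumed layout: brightness_temp[45][30][15], row-major, zero-based.
constexpr std::size_t kScanLines = 45;
constexpr std::size_t kFootprints = 30;
constexpr std::size_t kChannels = 15;

// scanLine is zero-based (HDFView row); footprint1 and channel1 are 1-based.
std::size_t brightnessTempOffset(std::size_t scanLine, std::size_t footprint1,
                                 std::size_t channel1) {
    if (scanLine >= kScanLines || footprint1 < 1 || footprint1 > kFootprints ||
        channel1 < 1 || channel1 > kChannels)
        throw std::out_of_range("index outside brightness_temp");
    const std::size_t footprint = footprint1 - 1;  // HDFView column
    const std::size_t channel = channel1 - 1;      // HDFView page: channel 5 -> page 4
    return (scanLine * kFootprints + footprint) * kChannels + channel;
}
```

The off-by-one between "channel 5" and "page 4" is exactly the kind of slip that makes an extract look plausible but wrong, so it's worth checking a few cells this way.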

Open up the extract file (200912_extract_C5F1-30_QA_TEST.csv in my case) in a text editor. Visually compare the values in the extract file to the values in HDFView. You don't have to check every value, but you want to check enough cases to convince yourself the correct values are being pulled.

Step 2: Compare File Sizes Of Passed And Failed Data Files

Checking the file sizes of the passed and failed data shows that the failed file is about two-thirds the size of the passed file. A fair portion of that is header information, but most of it is actually failed data. This means a lot of data is failing QA.

Step 3: Look At Failed Data

Opening up the failed data file, I saw a lot of data that looked reasonable to me. So I was failing data that should have passed.

To fix this problem, I tracked down a couple of bugs in the QA checks, expanded the number of footprints that don't get a limb validation check, and increased the tolerance of the limb validation from 0.5 to 10.0.

Even with these changes, a lot of data was still failing. I'll talk about that in the next section.

Results Of Running Modified Version Of AMSUExtract

To try to cut down on the number of scans failing, I gradually increased the tolerance. Eventually, I had it all the way up to 10.0 and still had more records failing than I expected. Screen shots of running with a 10.0 tolerance are below.

The error file looks a lot better, but further down the file there are still quite a few scans listed as failing.

At this point I realized it was hopeless to try to salvage the concept of running a limb validation in the extract. So I took it out and replaced it with the -FLOOR option.

References:

Download ncdump

Download HDFView

Tuesday, March 16, 2010

What's Wrong With This Picture?

Crazy Data From NASA Or Bugs In My Programs?

While I'm waiting to hear from NASA, I've started a QA check of both my code and the Aqua Satellite AMSU data. The above picture shows an example of some of the things I've come across. The picture shows NASA's AMSU channel 5 data for every day in January 2008 and January 2009 through January 2010 for all 30 footprints (14 months total). The view along the X axis is by footprint, with an average at the end. The data was generated by my AMSUSummary program and displayed in Apple Numbers.

You can see the obvious problems with the data. Footprints 17 and 18 are just whacked. Footprints 25 through 30 go in the wrong direction, and footprints 1 through 6 have too high a value. Compare this to the more standard picture of what such a footprint snapshot should look like, shown below.

What 30 Footprints Should Look Like.

Below is the same data again, this time the X axis represents time.

And here's daily data from December 31st, 2009. This data has been through my QA process, and none of the scans for footprints 24 or 25 passed QA. The QA check makes sure none of the data is more than +/- 50% of the expected readings as defined in the literature.

So the question is: is this strange data due to bugs in my code, or is this the way the data actually looks? I've already done QA checks on my code, but this next week I'll be going over it again to see where the problem lies.

So for the rest of the week expect QA posts from me. In addition to checking out the code and data, these posts will give good instructions on how to use the programs I've written so far.

Monday, March 15, 2010

Request Sent To NASA For Unpublished Data And Algorithms Related To Creation Of Synthetic Channel 4 Data

As discussed in a previous post, NASA now synthesizes channel 4 data of the Aqua AMSU. Going through the steps needed to recreate this synthesis, I noticed several sets of required data are not available to the public. So I've sent off a request for this data as well as the associated (and undocumented, as far as I can tell) AMSU-A Radiative Transfer Algorithm. I've also requested information on the depth of the atmosphere that each footprint on channel 5 scans, so that I can get back to work on recreating the UAH temperatures.

The request was sent a few days ago. Hopefully they'll respond. They've already sent one piece of missing data, the at-launch noise for each channel (that's the NEDTi in the formula at the top of this post). So my thanks to NASA JPL for that.

The requested data is:

● The 230000 cases used to create the values for the vectors Ai and Theta Bar i, or the values of vectors Ai and Theta Bar i themselves if the 230000 readings are no longer available.

● Documentation on how the AMSU-A Radiative Transfer Algorithm actually works.

● Atmospheric scan depth for each footprint on channel 5.

Previous Posts In This Series:

References:

NASA Responds

AIRS/AMSU/HSB Version 5 Modification of Algorithm to Account for Increased NeDT in AMSU Channel 4


Labels: Aqua Satellite, NASA, Raw AMSU Data, Raw UAH Temperature Data

Sunday, March 14, 2010

Aqua Satellite Project, Update 3 Released

Update 3 for the Aqua Satellite Project is ready. You can download it here. It includes a new AMSUNormalize program for normalizing temperature data produced by AMSUSummary.

Additionally, a -l flag was added to AMSUExtract and AMSUSummary to also extract the latitude and longitude of each footprint.

Those are the big items. For additional details on the release, see the change log.


Saturday, March 13, 2010

Draw2D JavaScript Graphics Engine Released

Draw2D version 1.0 has just been released and you can get it here.

Draw2D is a JavaScript graphics engine that allows you to add dynamically created shapes to any web page. Draw2D also supports mouse events, allowing shapes to respond to user input. Like all my coding projects, the Draw2D code has been placed in the public domain.

Draw2D will serve as the graphics engine for new features available in the Climate Scientist Starter Kit, Version 2.0. More details on this will be given in future posts.

The download includes source code, several HTML example pages, and a programmer's guide in Apple Pages, Microsoft Word, and Adobe PDF formats.

I'll finish this post with a few screenshots showing what Draw2D can do.

Points, Lines, Quadratic Curves, Cubic Curves

Triangles And Polygons

Rectangles And Round Rectangles

Ovals And Arcs


Friday, March 12, 2010

The Case For Raw Data And Source Code

There's been a bit of chatter about raw data and source code in science on a few of the climate blogs lately. The issue has tended to be framed as "raw data" vs. "anomalies". I think that's a fairly poor way to frame it, because anyone using anomalies can also use raw data, and vice versa.

Bottom line, I think it's extremely important for scientists to make all their raw data and computer source code available to the public. The rest of this post will present a few reasons why.

The Computer Is The Science

Computers increasingly play a central role in science. They don't just tabulate results and draw graphs. They are the tools used to perform measurements and experiments, and even to define the physics those experiments run on.

String Theory, for example, has very little relationship to the real world from an experimental point of view. Most of String Theory is code running on a computer. Climate models, too, are computer programs that define their own physics and run entirely on a computer.

I'm not trying to make an argument over whether or not doing science on a computer is good or bad. I'm merely pointing out that it's done, and it's rather common.

Point 1: Verifiability

A consequence of having your physics defined by code and your universe defined by data is that experiments run on a computer cannot be verified without the code and data. Verifiability is a fundamental goal of science, so the code and data must be available. I think this is obvious, but I want to present an example of unverifiable science that was just revealed on this blog.

The Aqua satellite carries an instrument called an AMSU that scans the Earth at 15 different microwave frequencies. Each of these detectors is called a channel. It turns out that the hardware for channel 4 failed in late 2007, and since then NASA, which owns the Aqua satellite, has been producing channel 4 data not from instrument readings, but from computer code and lookup data.

They have good reasons for believing this should work. They've run tests using their code and lookup data, and the results match reality very well. The problem is that the code and lookup data used to create this synthetic channel 4 data are not available outside NASA's JPL. So not only has no one outside JPL ever verified the claim that the procedure is sound, no one outside JPL can verify it. We (no matter who "we" are) have to take it on good faith that the data NASA creates for channel 4 is realistic. It cannot be demonstrated to be realistic.

Clearly, this is not science. To get science back on the track towards verifiability in the modern world, open access to all data and computer code is needed.

Point 2: Data Compatibility

I think this second point applies more to climate science in particular than science as a whole. It has to do with the concept of processing temperature data into anomalies. There are very good reasons why scientists convert temperature readings into anomalies. If done with care, anomalies can be used to meaningfully compare two different sets of data, for example.

Anomalies also have the nice feature of tracking changes in temperature in a way that doesn't depend on the month that came just before. For example, a July anomaly of, say, +0.2 doesn't mean that July was 0.2 degrees hotter than the previous June. It means that July was 0.2 degrees hotter than the average July over a fixed reference (baseline) period. All by itself, this use of anomalies filters the seasonal cycle out of the data. We're not interested in learning whether it gets hotter as we move into summer; we already know it usually does. We're interested in learning whether the trend over the years is going up or down, and that's what anomalies tell us.
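A minimal sketch of the usual anomaly calculation, assuming monthly mean temperatures stored in a dictionary keyed by (year, month) and a baseline period you pick yourself. The function `monthly_anomalies` and the sample numbers are hypothetical, not taken from any particular data set. Each month is compared with the average of the same calendar month over the baseline years, which is what strips out the seasonal cycle.

```python
from statistics import mean


def monthly_anomalies(temps, base_start, base_end):
    """Convert {(year, month): temperature} into {(year, month): anomaly}.

    Each anomaly is the departure from the average of the same calendar
    month over the baseline years base_start..base_end (inclusive).
    Months with no baseline data are skipped.
    """
    # Climatology: the average temperature of each calendar month in the baseline.
    climatology = {}
    for month in range(1, 13):
        base_values = [t for (y, m), t in temps.items()
                       if m == month and base_start <= y <= base_end]
        if base_values:
            climatology[month] = mean(base_values)

    # Anomaly = observed temperature minus that calendar month's baseline average.
    return {(y, m): t - climatology[m]
            for (y, m), t in temps.items() if m in climatology}


# Hypothetical June/July monthly means in degrees C.
temps = {
    (2000, 6): 14.0, (2000, 7): 15.0,
    (2001, 6): 14.5, (2001, 7): 16.0,
    (2002, 6): 14.25, (2002, 7): 15.75,
}
anomalies = monthly_anomalies(temps, 2000, 2001)
print(anomalies[(2002, 6)], anomalies[(2002, 7)])   # 0.0 0.25
```

Here July 2002 is 1.5 degrees warmer than June 2002, yet its anomaly is only +0.25, because it is compared with the baseline Julys (average 15.5), not with the June before it.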

But it's difficult to compare data in anomaly form to data that's not in anomaly form, and most climate data (CO2, water vapor, sunspots, cosmic rays, and so on) is not in anomaly form. So if we have a July that's cooler than the previous July but warmer than the June just before it, the July anomaly drops below last July's, whereas the July temperature, and most other climate data that's correlated with temperature, goes up.
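To make the comparison problem concrete, here is a hypothetical June/July example. The baseline and observed temperatures are made up; the only point is that the raw June-to-July change and the year-over-year change in the July anomaly can have opposite signs.

```python
# Hypothetical baseline climatology (average June and July over some
# reference period) and hypothetical observed monthly means, in degrees C.
baseline = {6: 14.0, 7: 15.0}
observed = {
    (2008, 7): 15.5,   # last July
    (2009, 6): 14.0,   # this June
    (2009, 7): 15.25,  # this July: cooler than last July, warmer than this June
}


def anomaly(year, month):
    """Departure of an observed month from its calendar-month baseline."""
    return observed[(year, month)] - baseline[month]


# Raw data (like most non-anomaly climate series) rises from June to July...
raw_change = observed[(2009, 7)] - observed[(2009, 6)]
# ...while the July anomaly falls relative to last July's anomaly.
anomaly_change = anomaly(2009, 7) - anomaly(2008, 7)

print(f"raw June->July change:     {raw_change:+.2f}")      # +1.25
print(f"July anomaly vs last July: {anomaly_change:+.2f}")  # -0.25
```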

Point 3: Errors

I'll make this last point quick, because this post is longer than I expected.

You simply cannot find errors in data that's been homogenized and processed until it no longer has its original shape. Smoothing, averaging, and so on wash away these errors, and the person using the data never knows they were there. This doesn't mean, btw, that such processes correct the errors. They simply hide them. To verify that the data is correct, both the original data and the computer code used to process it are required.
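A minimal, hypothetical illustration of how averaging hides an error: 16 made-up station readings for the same month, one of which is recorded 2.0 degrees too high. The station count, the values, and the 1.0 degree screening threshold are assumptions chosen for the example, not any real homogenization procedure.

```python
from statistics import median

# Hypothetical: 16 stations report the same month's mean temperature.
true_values = [12.0] * 16
reported = list(true_values)
reported[7] += 2.0          # made-up recording error at station 7

# After averaging, the error survives only as a small, plausible-looking shift.
regional_average = sum(reported) / len(reported)                  # 12.125
shift = regional_average - sum(true_values) / len(true_values)    # 0.125

# With the raw readings in hand, the bad station stands out immediately.
typical = median(reported)
suspects = [i for i, v in enumerate(reported) if abs(v - typical) > 1.0]

print(regional_average, shift, suspects)   # 12.125 0.125 [7]
```

Someone given only the regional average of 12.125 has no way to tell that one station is wrong; someone given the raw readings can find it with a one-line check.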

Conclusion

So these are some of the reasons I believe it's important to make all data and computer code available to the public. I want to stress again that making raw data and code available is not an argument against also publishing processed results. There's no reason why you can't do both.


Thursday, March 11, 2010

Climate Scientist Starter Kit v2.0 Coming, Part IV: Total Solar Irradiance, Sunspots, Regional Cosmic Rays

In this post we look at several new data sets being added to version 2.0 of the Climate Scientist Starter Kit: Total Solar Irradiance (TSI), Sunspots, and regional Cosmic Rays.

Total Solar Irradiance (TSI)

Total Solar Irradiance is the total power of sunlight, per unit area, arriving at the top of the Earth's atmosphere at any given time. Version 2.0 of the Climate Scientist Starter Kit includes monthly TSI values taken from three satellites at three different frequencies. This data extends from February, 1996 to October, 2009.

Sunspots

Version 1.5 of the Climate Scientist Starter Kit already has Sunspot Group data. Version 2.0 adds Sunspots. This data extends from January, 1749 to January, 2010.

Regional Cosmic Rays

Regional Cosmic Rays have been added to the existing Cosmic Ray data of version 1.5. With this added data, there is now at least one cosmic ray measurement for every region used by UAH when it publishes temperatures: Northern Hemisphere, Southern Hemisphere, Tropics, Northern Extra Tropics, Southern Extra Tropics, North Pole, South Pole, and the 48 contiguous U.S. states.


Cosmic ray graphs by station: Thule, Greenland (North Pole Region); Swarthmore, Pennsylvania (U.S. 48 States, Northern Extra Tropics); Climax, Colorado (U.S. 48 States, Northern Extra Tropics); Haleakala, Hawaii (Tropics); Huancayo, Peru (Tropics); Hermanus, South Africa (Southern Extra Tropics); McMurdo, Antarctica (South Pole); South Pole (South Pole); and a combined graph of McMurdo, Swarthmore, South Pole, and Thule.

Previous Posts In This Series:

Climate Scientist Starter Kit v2.0 Coming

Climate Scientist Starter Kit v2.0 Coming, Part II: Regional Data

Climate Scientist Starter Kit v2.0 Coming, Part III: Ozone And Pressure
