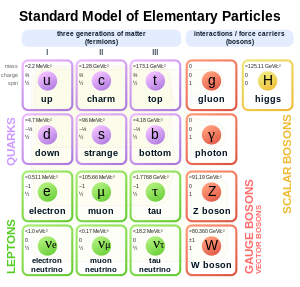

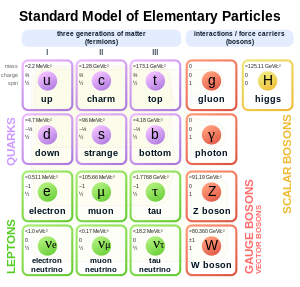

POOMA lets you define custom particles for use in your physics simulations. A "particle" in POOMA can be anything: baseballs, planets, imaginary particles, whatever you want. In this post we're going to create definitions for the particles of the standard model of particle physics. If you want to brush up on the standard model, you can find a good description of it at Wikipedia's Standard Model page. You can download the code discussed in this post (just one header file) from The POOMA Add-On Project at SourceForge. A short POOMA program that uses these custom particles is available here.

IMPORTANT NOTE: While all the main particles from the standard model are created, they are treated in a "classical" manner in the classes we create. That is, their positions, charges, velocities, and so on, are all precisely known.

Extending POOMA's Particle Template

You create a custom particle in POOMA by extending the Particle Template. The class you create can contain any data you'd like, and you'll certainly want it to hold the information that applies to your custom particle type as a whole. It is also customary to include Vectors for the information that changes from particle to particle. For example, because every instance of your particle has a different position, the particle class customarily includes a Vector that stores the position of each particle.
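The split between per-type data and per-particle data can be sketched without POOMA at all. The snippet below is only an illustration of the layout: `ElectronSet`, `Vec3`, and `create()` are names made up for this sketch, and `std::vector` stands in for the POOMA containers that the real header uses.

```cpp
#include <array>
#include <cstddef>
#include <vector>

// Stand-in for a POOMA position vector so the sketch compiles on its own.
using Vec3 = std::array<double, 3>;

// Hypothetical container for every particle of one type (not the real header).
class ElectronSet {
public:
    // Per-type data: identical for every electron, so it is stored once.
    static double massMeV() { return 0.511; }  // approximate electron mass
    static double charge() { return -1.0; }    // in units of the elementary charge

    // Per-particle data: entry i of each array belongs to particle i.
    std::vector<Vec3> pos;
    std::vector<Vec3> vel;

    // Append one particle and return its index.
    std::size_t create(const Vec3& position, const Vec3& velocity) {
        pos.push_back(position);
        vel.push_back(velocity);
        return pos.size() - 1;
    }

    std::size_t size() const { return pos.size(); }
};
```

With this layout, one object represents the whole population of a particle type, and adding a particle means growing every per-particle array by one entry.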

This post will discuss the header file that defines the particles of the standard model. First we will look at the base class, CustomParticle, and then the derived classes.

The CustomParticle Base Class

The CustomParticle class is the base class for all the other classes we'll define. Its constructor is protected, so it can't be instantiated directly. You'll need to instantiate one of the derived classes.

A description of the members is provided below:

*) getMass() Returns the mass of the particle, measured in MeV.

*) getCharge() Returns the charge of the particle.

*) getSpin() Returns the spin of the particle.

*) getName() Returns the name of the particle. Example names are Electron, Photon, and Charm Quark.

*) getInteractsWithStrong() Returns true if the particle interacts with the strong force, or false if it doesn't.

*) getInteractsWithElectromagnetic() Returns true if the particle interacts with the electromagnetic force, or false if it doesn't.

*) getInteractsWithWeak() Returns true if the particle interacts with the weak force, or false if it doesn't.

*) getInteractsWithGravity() Returns true if the particle interacts with gravity, or false if it doesn't.

*) getInteractsWithParticle() Pass this function a particle and it will return true if this particle interacts with it, or false if it doesn't.

*) getLifetime() Returns the lifetime of the particle in seconds. A value of -1 means the particle lasts forever.

*) isFermion() Returns true if a particle is a fermion, false if it is not.

*) isBoson() Returns true if a particle is a boson, false if it is not.

*) isQuark() Returns true if a particle is a quark, false if it is not.

*) isLepton() Returns true if a particle is a lepton, false if it is not.

*) DynamicArray< PointType_t, AttributeEngineTag_t > pos; An array that stores the positions of all particles of a given type.

*) DynamicArray< PointType_t, AttributeEngineTag_t > vel; An array that stores the velocities of all particles of a given type.

*) globalCreateAndSetRandomPositionsAndVelocities() Creates a given number of objects, all of the same particle type, and sets their positions and velocities to random values. Pass this function the number of particles to create, a seed for the random number generator, and true or false depending on whether you want the velocities randomized or set to zero.

*) getDistance() A static helper function that returns the distance between two vectors. Pass it the positions of two particles and it will return the distance between them.
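To make the shape of this interface concrete, here is a minimal standalone sketch of a base class with a protected constructor, a few of the accessors listed above, and a static distance helper. It is not the code from the header: `SketchParticle`, `SketchElectron`, `Vec3`, and `isStable()` are hypothetical names, and only the accessor names and the -1 lifetime convention come from the description above.

```cpp
#include <array>
#include <cmath>
#include <cstddef>
#include <string>
#include <utility>

using Vec3 = std::array<double, 3>;  // stand-in for a POOMA position vector

// Hypothetical base class mirroring some of the accessors described above.
class SketchParticle {
public:
    virtual ~SketchParticle() = default;

    double getMass() const { return mass_; }          // MeV
    double getCharge() const { return charge_; }      // units of the elementary charge
    double getSpin() const { return spin_; }
    const std::string& getName() const { return name_; }
    double getLifetime() const { return lifetime_; }  // seconds, -1 means forever

    // Convenience helper added for this sketch only.
    bool isStable() const { return lifetime_ < 0.0; }

    // Euclidean distance between two positions.
    static double getDistance(const Vec3& a, const Vec3& b) {
        double sum = 0.0;
        for (std::size_t i = 0; i < a.size(); ++i) {
            const double d = a[i] - b[i];
            sum += d * d;
        }
        return std::sqrt(sum);
    }

protected:
    // Protected constructor: only derived classes can create a particle.
    SketchParticle(std::string name, double mass, double charge,
                   double spin, double lifetime)
        : name_(std::move(name)), mass_(mass), charge_(charge),
          spin_(spin), lifetime_(lifetime) {}

private:
    std::string name_;
    double mass_;
    double charge_;
    double spin_;
    double lifetime_;
};

// A concrete type has a public constructor that forwards fixed values.
class SketchElectron : public SketchParticle {
public:
    SketchElectron() : SketchParticle("Electron", 0.511, -1.0, 0.5, -1.0) {}
};
```

Writing `SketchParticle p(...)` outside a derived class fails to compile, which is the behavior the protected constructor is meant to enforce.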

The Derived Particle Classes

There are derived particle classes for each of the three kinds of particle: Quark, Lepton, and Boson. These classes have protected constructors and cannot be instantiated directly. Instead, you'll create objects from the classes that derive from them. A class inheritance diagram is provided below. Only the classes that inherit from Quark, Lepton, and Boson can be instantiated.

Inheritance Diagram.

There is also an enumeration called ColorCharge, which defines the color charge that quarks have, and a GluonColorCharge enumeration for gluons. The base class for all quarks, Quark, and the Gluon class both have getColorCharge() and setColorCharge() methods for their color charge.
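As a rough picture of how such an enumeration and its getter and setter fit together, consider the sketch below. The enumerator names, `ColorChargeSketch`, `ColoredQuarkSketch`, and the `antiColor()` helper are all assumptions made for illustration; the header's actual ColorCharge values may be named differently.

```cpp
// Hypothetical enumerators: three colors for quarks, three anticolors for antiquarks.
enum class ColorChargeSketch { Red, Green, Blue, AntiRed, AntiGreen, AntiBlue };

// Hypothetical quark-like class holding a mutable color charge.
class ColoredQuarkSketch {
public:
    explicit ColoredQuarkSketch(ColorChargeSketch color) : color_(color) {}

    ColorChargeSketch getColorCharge() const { return color_; }
    void setColorCharge(ColorChargeSketch color) { color_ = color; }

private:
    ColorChargeSketch color_;
};

// Helper for this sketch: map a color to its anticolor and back.
inline ColorChargeSketch antiColor(ColorChargeSketch c) {
    switch (c) {
        case ColorChargeSketch::Red:       return ColorChargeSketch::AntiRed;
        case ColorChargeSketch::Green:     return ColorChargeSketch::AntiGreen;
        case ColorChargeSketch::Blue:      return ColorChargeSketch::AntiBlue;
        case ColorChargeSketch::AntiRed:   return ColorChargeSketch::Red;
        case ColorChargeSketch::AntiGreen: return ColorChargeSketch::Green;
        case ColorChargeSketch::AntiBlue:  return ColorChargeSketch::Blue;
    }
    return c;  // not reached for valid enumerators
}
```

Color charge needs a setter, unlike mass or spin, because a quark's color changes whenever it exchanges a gluon.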

The Fermion class serves as the base for the Quark and Lepton classes. Fermion has values for weak isospin, weak hypercharge, and generation. These values are set by the constructor of derived classes and can be obtained using the getWeakIsospin(), getWeakHyperCharge(), and getGeneration() methods.

Additionally, quarks have charm, strange, top, bottom, isospin, and baryon number values. These values are set by the derived quark classes and can be obtained with the methods getCharm(), getStrange(), getTop(), getBottom(), getIsospin(), and getBaryonNumber().

Similarly, leptons have leptonic electronic number, leptonic muonic number, and leptonic tauonic number values. These values are set by the derived lepton classes and can be obtained with the methods getLeptonicElectronicNumber(), getLeptonicMuonicNumber(), and getLeptonicTauonicNumber().
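The layering described above, where each derived constructor passes fixed quantum numbers up to its base, might look roughly like the following. Every class name ending in `Sketch` is hypothetical, only a few of the getters are reproduced, and the numbers are the textbook left-handed values. The `electricCharge()` helper is not part of the header; it is included as a sanity check using the relation Q = T3 + Y/2 between charge, weak isospin, and weak hypercharge.

```cpp
#include <cmath>

// Hypothetical stand-in for the Fermion layer.
class FermionSketch {
public:
    virtual ~FermionSketch() = default;
    double getWeakIsospin() const { return weakIsospin_; }
    double getWeakHyperCharge() const { return weakHyperCharge_; }
    int getGeneration() const { return generation_; }

protected:
    FermionSketch(double weakIsospin, double weakHyperCharge, int generation)
        : weakIsospin_(weakIsospin), weakHyperCharge_(weakHyperCharge),
          generation_(generation) {}

private:
    double weakIsospin_;
    double weakHyperCharge_;
    int generation_;
};

// Hypothetical Quark layer: every quark has baryon number 1/3.
class QuarkSketch : public FermionSketch {
public:
    double getBaryonNumber() const { return 1.0 / 3.0; }
protected:
    QuarkSketch(double t3, double y, int gen) : FermionSketch(t3, y, gen) {}
};

// Hypothetical Lepton layer: carries an electron-family lepton number.
class LeptonSketch : public FermionSketch {
public:
    int getLeptonicElectronicNumber() const { return electronNumber_; }
protected:
    LeptonSketch(double t3, double y, int gen, int electronNumber)
        : FermionSketch(t3, y, gen), electronNumber_(electronNumber) {}
private:
    int electronNumber_;
};

// Concrete particles supply the values (left-handed states assumed).
class UpQuarkSketch : public QuarkSketch {
public:
    UpQuarkSketch() : QuarkSketch(+0.5, 1.0 / 3.0, 1) {}
};

class ElectronSketch : public LeptonSketch {
public:
    ElectronSketch() : LeptonSketch(-0.5, -1.0, 1, 1) {}
};

// Charge from weak quantum numbers: Q = T3 + Y / 2.
inline double electricCharge(const FermionSketch& f) {
    return f.getWeakIsospin() + f.getWeakHyperCharge() / 2.0;
}
```

For the up quark this gives 1/2 + 1/6 = 2/3, and for the electron -1/2 - 1/2 = -1, matching the charges one would expect getCharge() to report.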

Using Custom Particles

Finally, we want to use our custom particles in an actual POOMA program. If you haven't done so already, you can download the test program from here and the custom particle file from here.

The test program creates a number of electrons with zero velocity and places them in an imaginary box. The electrons bounce around the box, driven by their mutual repulsion. At every time step the positions of the electrons are printed to standard output.
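If you'd like to see the idea without installing POOMA, the loop such a program runs can be approximated in plain C++. This is a sketch under several assumptions, not the downloadable test program: `ElectronBox`, `makeBox()`, `step()`, and `run()` are invented names, the units are arbitrary (charge, mass, and the Coulomb constant are all set to 1), and the integrator is a simple fixed-step update.

```cpp
#include <array>
#include <cmath>
#include <cstddef>
#include <ostream>
#include <random>
#include <vector>

using Vec3 = std::array<double, 3>;  // stand-in for a POOMA vector

// Hypothetical state for the whole simulation.
struct ElectronBox {
    double size;            // the box spans [0, size] along each axis
    std::vector<Vec3> pos;  // one position per electron
    std::vector<Vec3> vel;  // one velocity per electron
};

// Create `count` electrons at seeded random positions, all at rest.
inline ElectronBox makeBox(std::size_t count, unsigned seed, double size) {
    std::mt19937 gen(seed);
    std::uniform_real_distribution<double> coord(0.0, size);
    ElectronBox box{size, {}, {}};
    for (std::size_t i = 0; i < count; ++i) {
        box.pos.push_back(Vec3{coord(gen), coord(gen), coord(gen)});
        box.vel.push_back(Vec3{0.0, 0.0, 0.0});
    }
    return box;
}

// Advance one time step with pairwise inverse-square repulsion.
inline void step(ElectronBox& box, double dt) {
    const std::size_t n = box.pos.size();
    std::vector<Vec3> acc(n, Vec3{0.0, 0.0, 0.0});
    for (std::size_t i = 0; i < n; ++i) {
        for (std::size_t j = i + 1; j < n; ++j) {
            Vec3 d{};
            double r2 = 1e-9;  // small softening term avoids dividing by zero
            for (std::size_t k = 0; k < 3; ++k) {
                d[k] = box.pos[i][k] - box.pos[j][k];
                r2 += d[k] * d[k];
            }
            const double invR3 = 1.0 / (r2 * std::sqrt(r2));
            for (std::size_t k = 0; k < 3; ++k) {
                acc[i][k] += d[k] * invR3;  // pushes i away from j
                acc[j][k] -= d[k] * invR3;  // equal and opposite push on j
            }
        }
    }
    for (std::size_t i = 0; i < n; ++i) {
        for (std::size_t k = 0; k < 3; ++k) {
            box.vel[i][k] += acc[i][k] * dt;
            box.pos[i][k] += box.vel[i][k] * dt;
            // Bounce off a wall: mirror the position and reverse the velocity.
            // Handles one bounce per step, so dt should be kept small.
            if (box.pos[i][k] < 0.0) {
                box.pos[i][k] = -box.pos[i][k];
                box.vel[i][k] = -box.vel[i][k];
            } else if (box.pos[i][k] > box.size) {
                box.pos[i][k] = 2.0 * box.size - box.pos[i][k];
                box.vel[i][k] = -box.vel[i][k];
            }
        }
    }
}

// Run `steps` time steps, writing "step index x y z" for every electron.
inline void run(ElectronBox& box, int steps, double dt, std::ostream& out) {
    for (int s = 0; s < steps; ++s) {
        step(box, dt);
        for (std::size_t i = 0; i < box.pos.size(); ++i) {
            out << s << ' ' << i << ' ' << box.pos[i][0] << ' '
                << box.pos[i][1] << ' ' << box.pos[i][2] << '\n';
        }
    }
}
```

To try it, create a box in `main` with something like `ElectronBox box = makeBox(8, 42, 10.0);` and then call `run(box, 100, 0.01, std::cout);` after including `<iostream>`. Even though every electron starts at rest, the repulsion sets them moving on the first step.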